Who am I?

Stable-Baselines

bert

David (aka HASy)

German Aerospace Center (DLR)

Why learn directly on real robots?

Simulation is all you need?

Simulation is all you need? (continued)

Why learn directly on real robots?

- because you can! (software/hardware)

- simulation is safer and faster

- sim-to-real transfer (sim2real) needs an accurate model and domain randomization

- challenges: robot safety, sample efficiency

Knowledge-guided RL

- knowledge about the task (frozen encoder)

- knowledge about the robot (neck)

- RL for improved robustness (CPG + RL)

Smooth Exploration for Robotic RL

Learning to drive in minutes

Learning to race in an hour

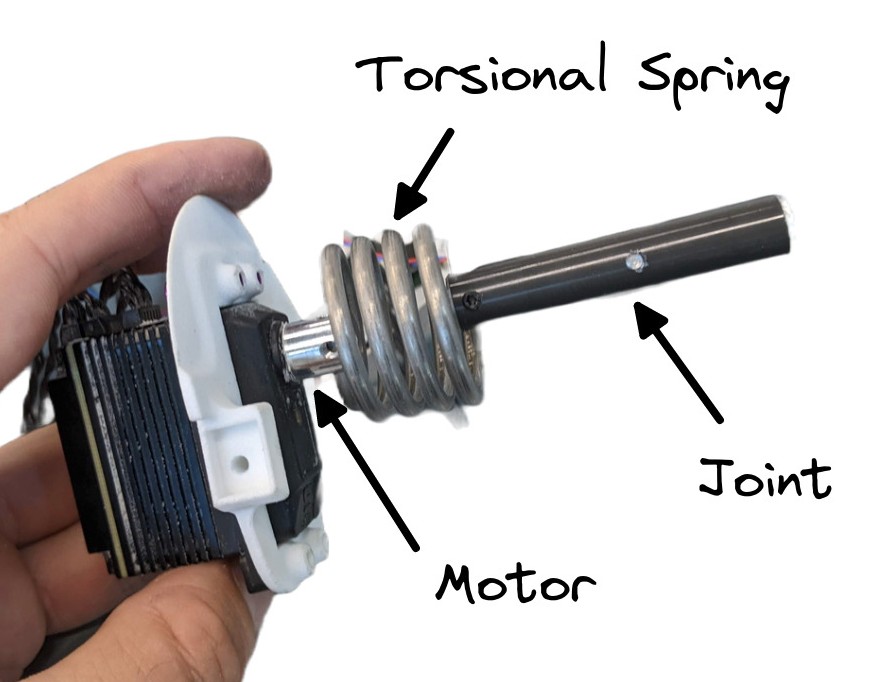

The elastic quadruped bert

- Mass: 3.1 kg

- Spring stiffness: 2.75 Nm/rad

- Max motor torque: 4 Nm
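To get a feel for how compliant the legs are, here is a back-of-the-envelope calculation. It assumes a linear torsional spring in series with the motor, which is an assumption since the slide only lists the values. Under that assumption, holding the maximum motor torque statically deflects the spring by:

\[\begin{aligned}
\Delta\theta = \frac{\tau_\text{max}}{k} = \frac{4\ \text{Nm}}{2.75\ \text{Nm/rad}} \approx 1.45\ \text{rad} \approx 83^\circ.
\end{aligned} \]

A deflection this large is what makes it worthwhile to store and release energy in the springs rather than treat them as a nuisance.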

RL from scratch (bert)

RL from scratch (3 years later)

Using SB3 + JAX = SBX: https://github.com/araffin/sbx

Learning to Exploit Elastic Actuators for Quadruped Locomotion

Raffin et al., "Learning to Exploit Elastic Actuators for Quadruped Locomotion," in preparation for RA-L, 2023.

Teaser

Another bert

Multiple gaits

Central Pattern Generator (CPG)

Coupled oscillators

\[\begin{aligned}
\dot{r}_i & = a (\mu - r_i^2)\, r_i \\
\dot{\varphi}_i & = \omega + \sum_j \, r_j \, c_{ij} \, \sin(\varphi_j - \varphi_i - \Phi_{ij})
\end{aligned} \]

Variables

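\[\begin{aligned}
\mu: \text{desired amplitude parameter } (r_i \text{ converges to } \sqrt{\mu}) \\
\omega: \text{angular frequency} \\
a: \text{amplitude convergence rate}, \quad c_{ij}: \text{coupling weights} \\
\Phi_{ij}: \text{phase shift between oscillators}
\end{aligned} \]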
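Below is a minimal sketch of these dynamics. It makes several assumptions that do not come from the talk: explicit Euler integration, four oscillators with legs ordered FL, FR, RL, RR, uniform coupling c_ij = 1, and a trot pattern. The step size `dt`, the rate `a`, and the helper names `cpg_step` and `trot_offsets` are illustrative choices. Setting Phi_ij = theta_j - theta_i for target phases theta makes the coupling term vanish once the legs reach that pattern.

```python
import math


def cpg_step(r, phi, mu, omega, coupling, phase_offsets, a=10.0, dt=0.001):
    """One explicit Euler step of N coupled oscillators (one per leg).

    r, phi        -- current amplitudes r_i and phases phi_i
    mu            -- amplitude parameter; r_i converges to sqrt(mu)
    omega         -- angular frequency in rad/s
    coupling      -- c_ij coupling weights (N x N)
    phase_offsets -- Phi_ij desired phase shifts (N x N)
    a, dt         -- convergence rate and step size (assumed values)
    """
    n = len(r)
    new_r, new_phi = [], []
    for i in range(n):
        dr = a * (mu - r[i] ** 2) * r[i]
        dphi = omega + sum(
            r[j] * coupling[i][j] * math.sin(phi[j] - phi[i] - phase_offsets[i][j])
            for j in range(n)
            if j != i
        )
        new_r.append(r[i] + dt * dr)
        # keep phases in [0, 2*pi); the sin terms are unaffected by the wrap
        new_phi.append((phi[i] + dt * dphi) % (2 * math.pi))
    return new_r, new_phi


def trot_offsets(pattern=(0.0, math.pi, math.pi, 0.0)):
    """Phi_ij = theta_j - theta_i for target phases theta (legs FL, FR, RL, RR).

    The coupling term is zero when phi_j - phi_i = Phi_ij, so FL/RR and FR/RL
    end up in phase, with the two diagonal pairs half a cycle apart.
    """
    return [[tj - ti for tj in pattern] for ti in pattern]


if __name__ == "__main__":
    n = 4
    coupling = [[1.0] * n for _ in range(n)]
    offsets = trot_offsets()
    r, phi = [0.1] * n, [0.0, 2.5, 3.5, 0.5]  # arbitrary initial state
    for _ in range(10_000):  # 10 s of simulated time
        r, phi = cpg_step(r, phi, mu=1.0, omega=2 * math.pi * 2.0,
                          coupling=coupling, phase_offsets=offsets)
    print([round(x, 3) for x in r])
```

After a few simulated seconds, the amplitudes settle at sqrt(mu) = 1 and the legs lock into the trot pattern whatever the initial phases are. That phase locking is why a CPG provides a stable rhythmic prior.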

Desired foot position

\[\begin{aligned}
x_{des,i} &= \textcolor{#5f3dc4}{\Delta x_\text{len}} \cdot r_i \cos(\varphi_i)\\
z_{des,i} &= \Delta z \cdot \sin(\varphi_i) \\
\Delta z &= \begin{cases}
\textcolor{#5c940d}{\Delta z_\text{clear}} &\text{if $\sin(\varphi_i) > 0$ (\textcolor{#0b7285}{swing})}\\
\textcolor{#d9480f}{\Delta z_\text{pen}} &\text{otherwise (\textcolor{#862e9c}{stance}).}
\end{cases}
\end{aligned} \]
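The mapping from oscillator state to foot target fits in a few lines. This is a sketch: the function name `desired_foot_position` and the parameter values (in metres) are hypothetical and chosen only for illustration.

```python
import math


def desired_foot_position(r_i, phi_i, dx_len, dz_clear, dz_pen):
    """Open-loop foot target (x_des, z_des) for leg i from its oscillator state."""
    s = math.sin(phi_i)
    # swing (foot in the air) if sin(phi_i) > 0, stance otherwise
    dz = dz_clear if s > 0 else dz_pen
    x_des = dx_len * r_i * math.cos(phi_i)
    z_des = dz * s
    return x_des, z_des


# Hypothetical parameter values (metres), for illustration only
params = dict(dx_len=0.05, dz_clear=0.04, dz_pen=0.01)
for phi in (0.0, math.pi / 2, math.pi, 3 * math.pi / 2):
    print(round(phi, 2), desired_foot_position(1.0, phi, **params))
```

Using separate heights for swing and stance lets the optimizer trade ground clearance against how far the foot is pushed down during stance.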

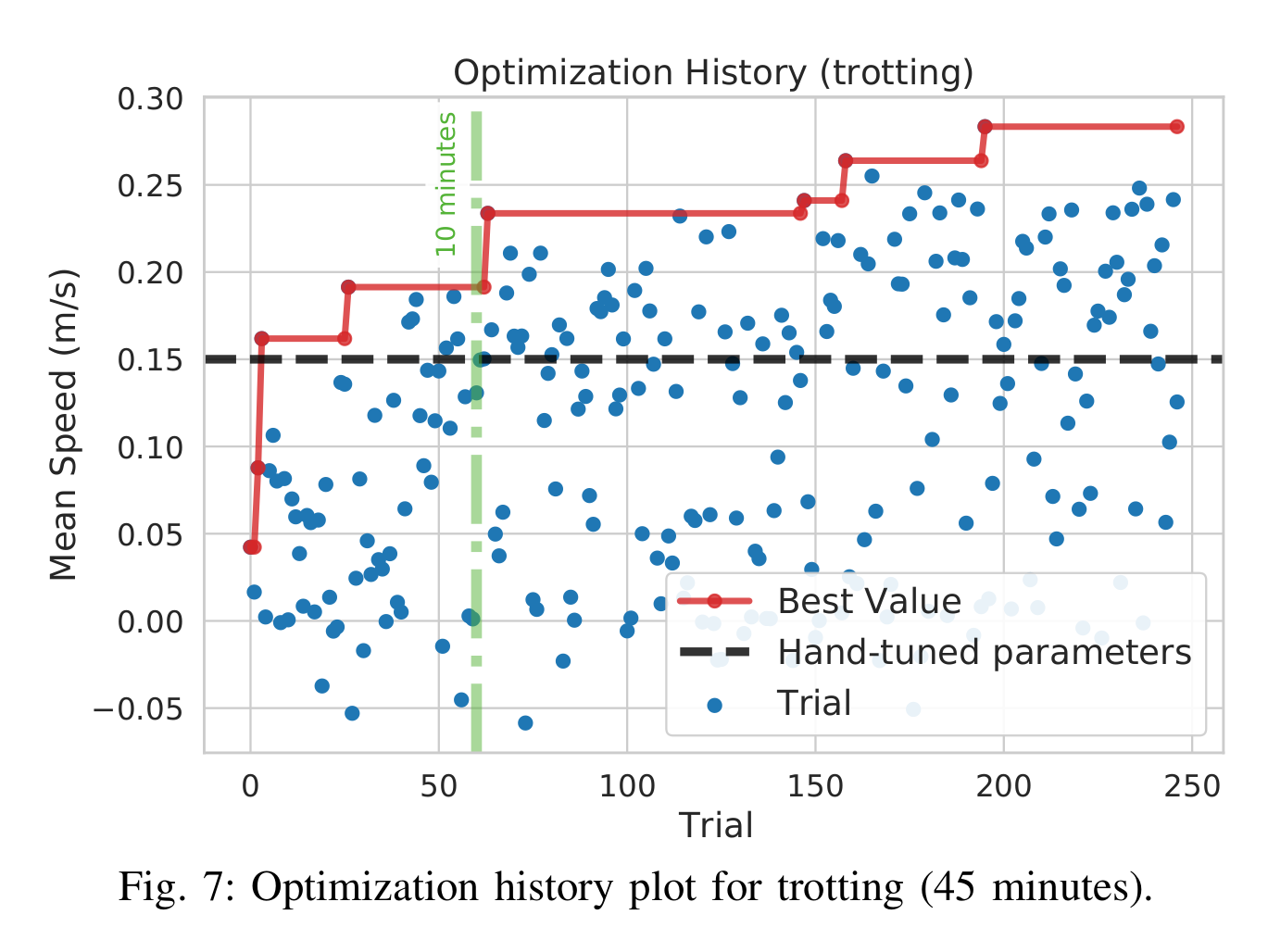

Black Box Optimization (BBO)

Variables

\[\begin{aligned}
\alpha = \{\textcolor{#5c940d}{\Delta z_\text{clear}}, \textcolor{#d9480f}{\Delta z_\text{pen}}, \textcolor{#5f3dc4}{\Delta x_\text{len}}, \textcolor{#0b7285}{\omega_\text{swing}}, \textcolor{#862e9c}{\omega_\text{stance}}\}.
\end{aligned} \]

BBO

\[\begin{aligned}
\alpha^* = \operatorname*{arg\,max}_{\alpha} f(\alpha).
\end{aligned} \]

Fitness function

\[\begin{aligned}
f(\alpha): \text{mean forward speed of the gait with parameters } \alpha.
\end{aligned} \]
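A black-box optimizer only needs to evaluate f(alpha) and compare the results. The sketch below uses plain random search as a stand-in, because the slides do not name the optimizer; methods such as CMA-ES are usually more sample-efficient. The bounds are hypothetical, and `toy_fitness` is a made-up placeholder that stands in for measuring the robot's mean forward speed.

```python
import random

# Hypothetical search bounds for alpha; the real ranges are not given on the slides.
BOUNDS = {
    "dz_clear": (0.01, 0.08),     # m
    "dz_pen": (0.0, 0.03),        # m
    "dx_len": (0.01, 0.10),       # m
    "omega_swing": (2.0, 20.0),   # rad/s
    "omega_stance": (2.0, 20.0),  # rad/s
}


def random_search(fitness, bounds, n_trials=200, seed=0):
    """Maximize a black-box fitness by uniform random sampling of alpha."""
    rng = random.Random(seed)
    best_alpha, best_f = None, float("-inf")
    for _ in range(n_trials):
        alpha = {name: rng.uniform(lo, hi) for name, (lo, hi) in bounds.items()}
        f = fitness(alpha)  # on hardware: run the open-loop gait, return mean forward speed
        if f > best_f:
            best_alpha, best_f = alpha, f
    return best_alpha, best_f


def toy_fitness(alpha):
    """Made-up placeholder with a known optimum; NOT a model of the robot."""
    target = {"dz_clear": 0.05, "dz_pen": 0.01, "dx_len": 0.07,
              "omega_swing": 12.0, "omega_stance": 8.0}
    return -sum(((alpha[k] - target[k]) / (hi - lo)) ** 2
                for k, (lo, hi) in BOUNDS.items())


best_alpha, best_f = random_search(toy_fitness, BOUNDS)
print(best_f, best_alpha)
```

The optimizer sees only (alpha, f(alpha)) pairs, which is what makes this approach model-free. Each evaluation on the real robot costs one trial run, so the number of trials is the budget to watch.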

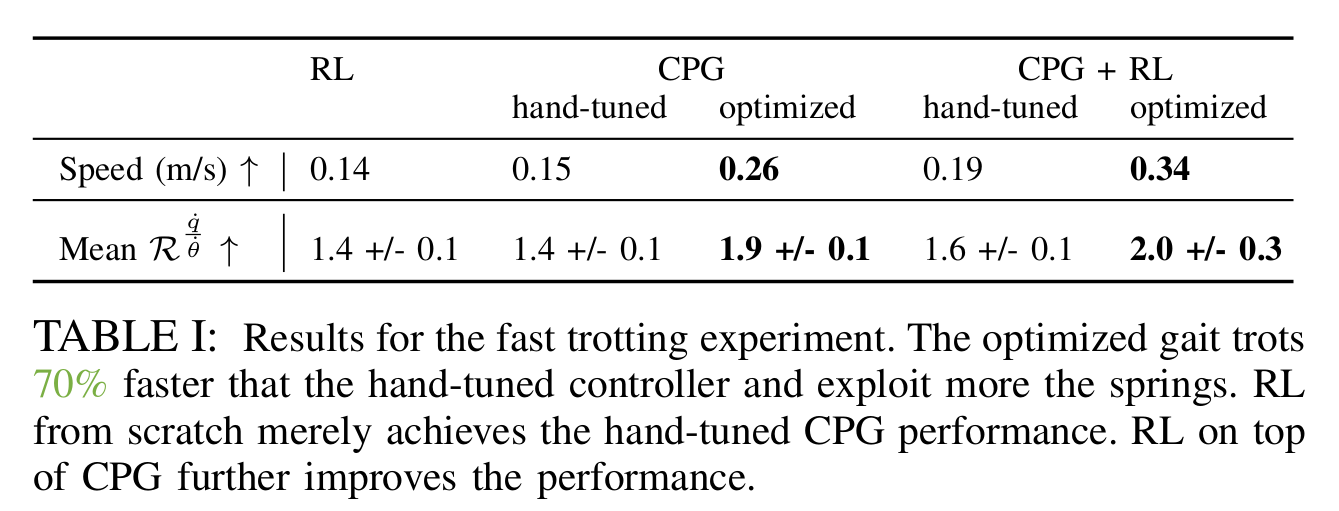

Result

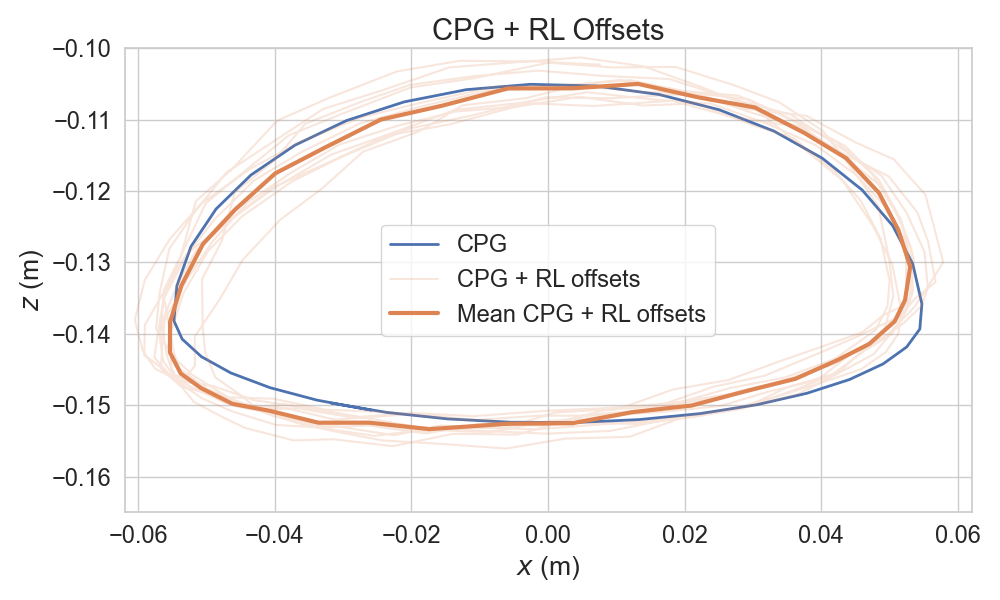

Closing the loop with RL

desired foot position = open-loop (CPG) foot position + closed-loop correction from the RL policy

\[\begin{aligned}
x_{des,i} &= \textcolor{#a61e4d}{\Delta x_\text{len} \cdot r_i \cos(\varphi_i)} + \textcolor{#1864ab}{\pi_{x,i}(s_t)} \\
z_{des,i} &= \textcolor{#a61e4d}{\Delta z \cdot \sin(\varphi_i)} + \textcolor{#1864ab}{\pi_{z,i}(s_t)}
\end{aligned} \]
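The sketch below illustrates this residual structure. It assumes the policy outputs are squashed to [-1, 1] and then scaled by a hypothetical `max_correction`. `linear_tanh_policy` is only a placeholder for the trained network; in practice, the policy would come from an RL library such as SB3/SBX. The open-loop targets are passed in as computed by the CPG.

```python
import math


def linear_tanh_policy(weights, state):
    """Hypothetical stand-in for pi(s_t): a tanh-squashed linear map, outputs in [-1, 1]."""
    return [math.tanh(sum(w * s for w, s in zip(row, state))) for row in weights]


def closed_loop_targets(open_loop, policy_out, max_correction=0.02):
    """Desired foot positions = open-loop CPG targets + scaled RL corrections.

    open_loop      -- list of (x_open, z_open) per leg, computed by the CPG
    policy_out     -- flat list [pi_x_0, pi_z_0, pi_x_1, pi_z_1, ...] in [-1, 1]
    max_correction -- largest correction in metres (assumed value)
    """
    targets = []
    for i, (x_open, z_open) in enumerate(open_loop):
        pi_x, pi_z = policy_out[2 * i], policy_out[2 * i + 1]
        targets.append((x_open + max_correction * pi_x,
                        z_open + max_correction * pi_z))
    return targets


# Usage with made-up numbers: 4 legs, a 3-dimensional observation s_t
open_loop = [(0.05, 0.0), (-0.05, 0.0), (-0.05, 0.0), (0.05, 0.0)]
state = [0.1, -0.2, 0.3]
weights = [[0.5, -1.0, 2.0] for _ in range(8)]  # 8 outputs = (x, z) for 4 legs
print(closed_loop_targets(open_loop, linear_tanh_policy(weights, state)))
```

Bounding the correction keeps the CPG as the main source of the gait. With a zero policy output, the robot simply executes the open-loop gait.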

Video

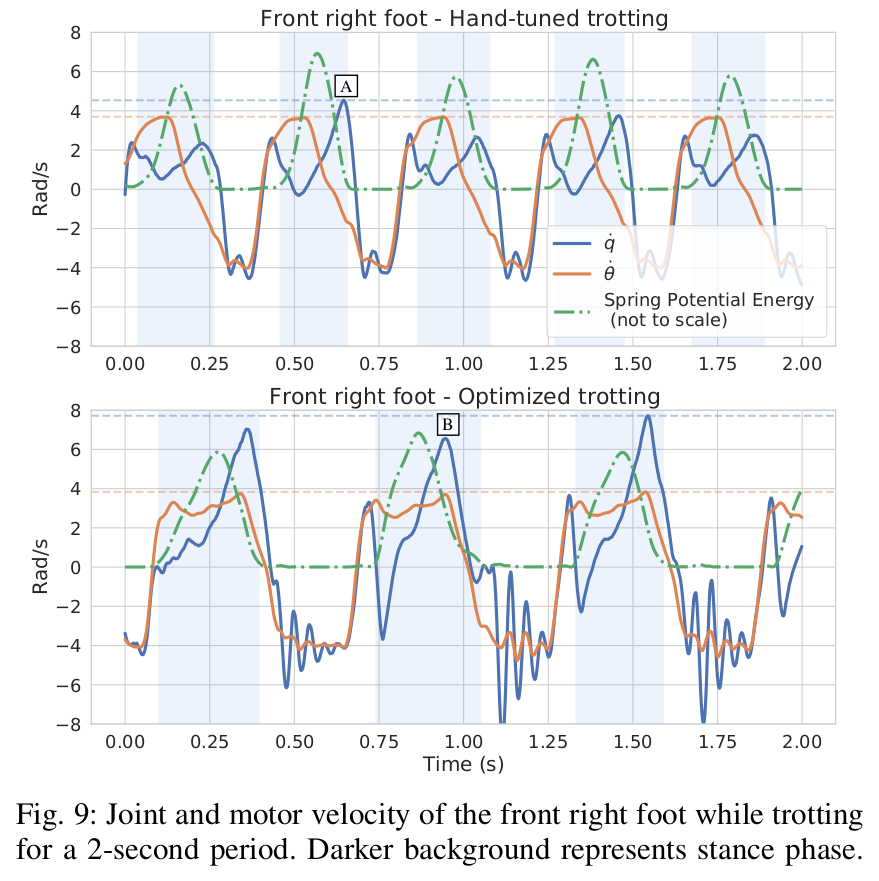

Fast trot task

Trot comparison

Learning to Exploit Elastic Actuators

Conclusion

- learn directly on the real robot

- exploit elastic actuators

- model-free, works on multiple robots

- RL from scratch is not enough