Enabling Reinforcement Learning

on Real Robots

RL 101

Motivation

Learning directly on real robots

Simulation to reality

In the papers...

Rudin, Nikita, et al. "Learning to walk in minutes using massively parallel deep reinforcement learning." CoRL, 2021.

Simulation to reality (2)

...in reality.

Duclusaud, Marc, et al. "Extended Friction Models for the Physics Simulation of Servo Actuators." (2024)

ISS Experiment (1)

Credit: ESA/NASA

ISS Experiment (2)

Before

After, with the 1kg arm

Can it turn?

Can it still turn?

Outdoor

Challenges of real robot training

- (Exploration-induced) wear and tear

- Sample efficiency

➜ one robot, manual resets - Real-time constraints

➜ no pause, no acceleration, multi-day training - Computational resource constraints

Outline

- Reliable Software Tools for RL

- Smooth Exploration

- Integrating Pose Estimation

- Combining Oscillators and RL

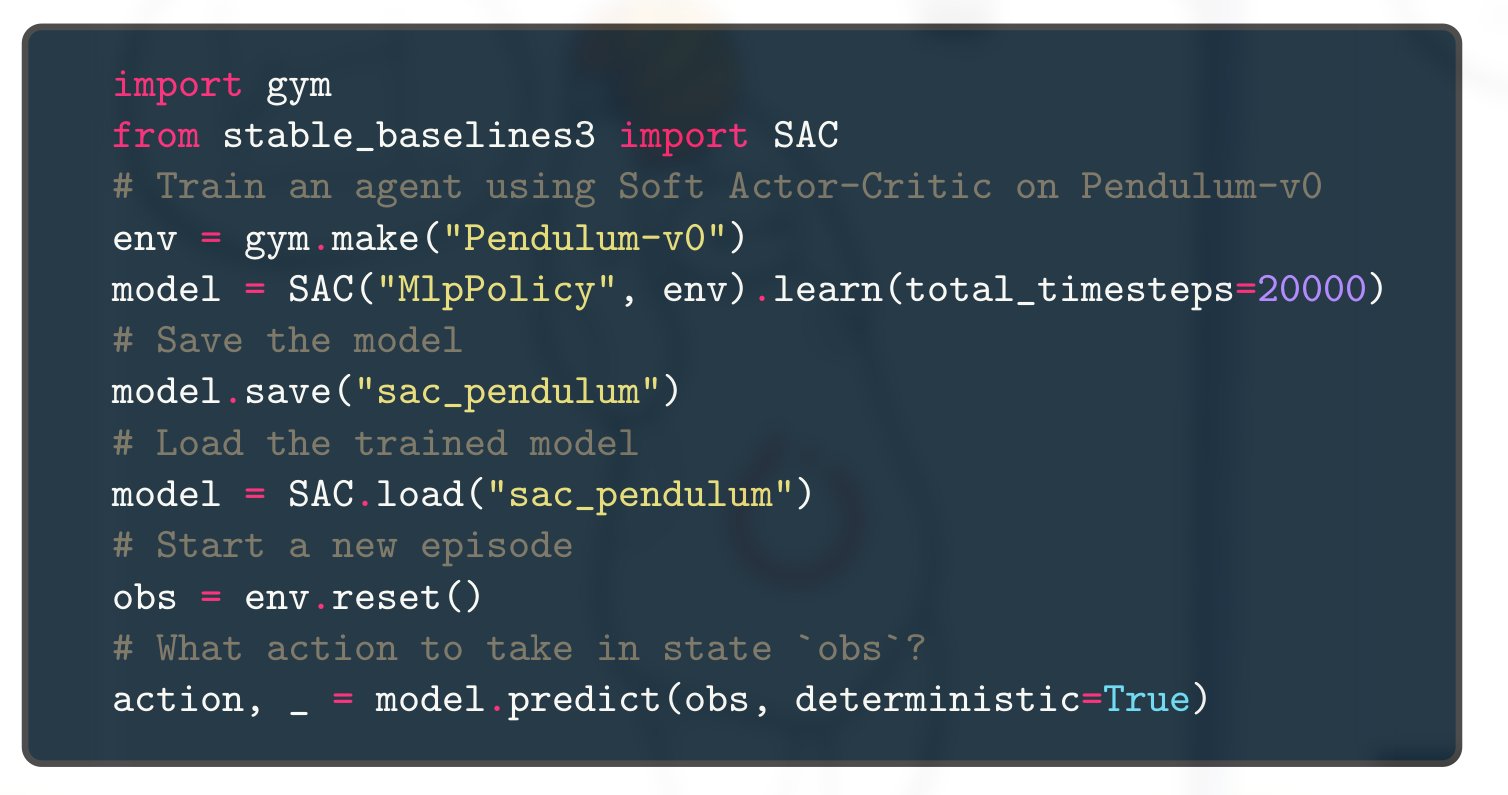

Stable-Baselines3 (SB3)

https://github.com/DLR-RM/stable-baselines3

Raffin, Antonin, et al. "Stable-baselines3: Reliable reinforcement learning implementations." JMLR (2021)

Reliable Implementations?

- Performance checked

- Software best practices (96% code coverage, type checked, ...)

- Active community (9000+ stars, 2700+ citations, 7M+ downloads)

- Fully documented

Reproducible Reliable RL: SB3 + RL Zoo

RL Zoo: Reproducible Experiments

- Training, loading, plotting, hyperparameter optimization

- Everything that is needed to reproduce the experiment is logged

- 200+ trained models with tuned hyperparameters

SBX: A Faster Version of SB3

Stable-Baselines3 (PyTorch) vs SBX (Jax)

Recent Advances: DroQ

More gradient steps: 4x more sample efficient!

RL from scratch in 10 minutes

Using SB3 + Jax = SBX: https://github.com/araffin/sbx

- Reliable Software Tools for RL

- Smooth Exploration

- Integrating Pose Estimation

- Combining Oscillators and RL

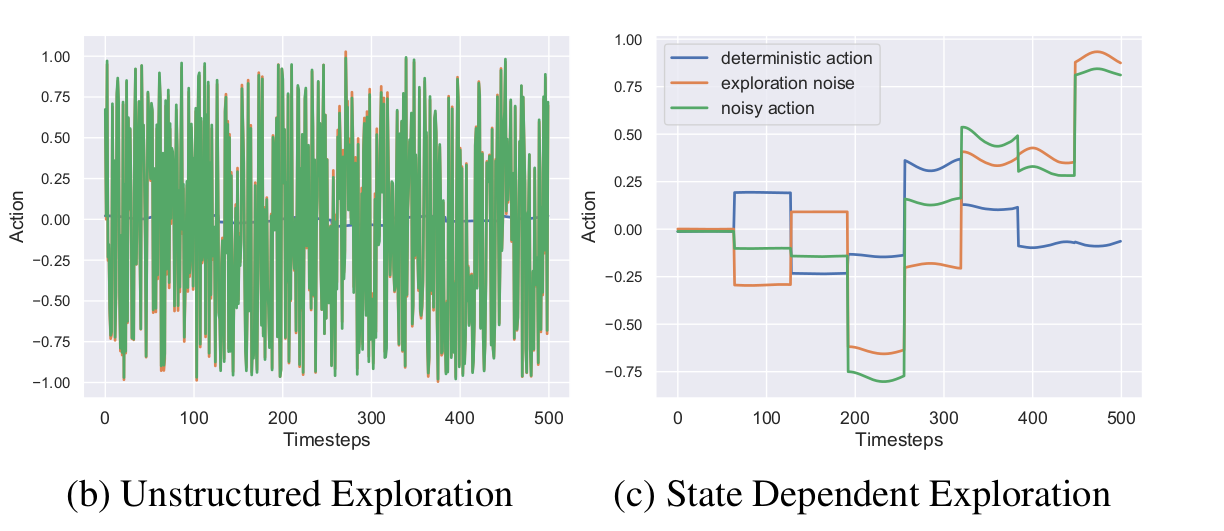

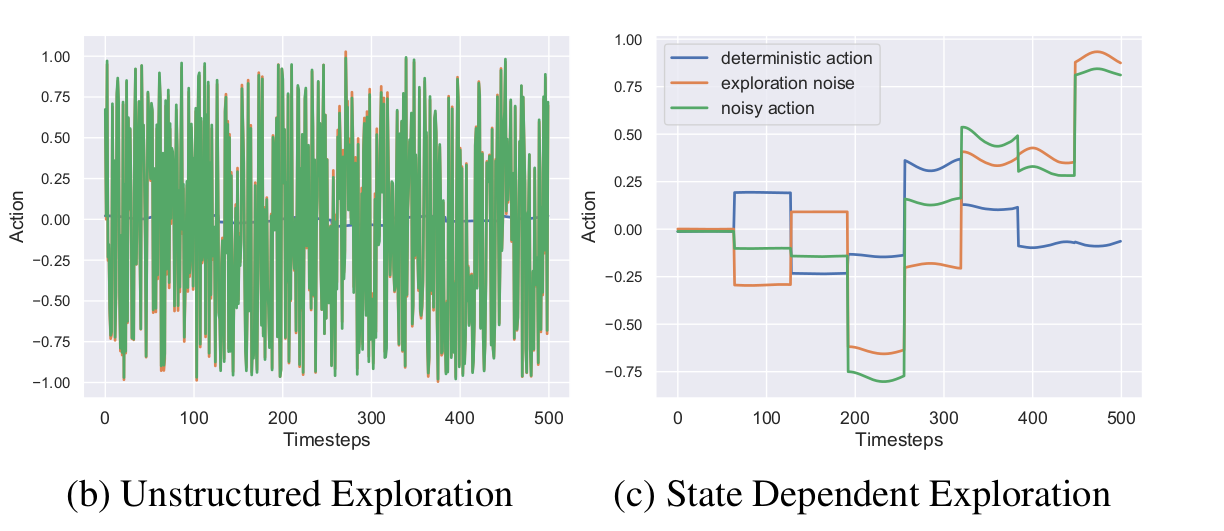

Smooth Exploration for Robotic RL

Raffin, Antonin, Jens Kober, and Freek Stulp. "Smooth exploration for robotic reinforcement learning." CoRL. PMLR, 2022.

generalized State-Dependent Exploration (gSDE)

Trade-off between

return and continuity cost

Results

- Reliable Software Tools for RL

- Smooth Exploration

- Integrating Pose Estimation

- Combining Oscillators and RL

Fault-tolerant Pose Estimation

Raffin, Antonin, Bastian Deutschmann, and Freek Stulp. "Fault-tolerant six-DoF pose estimation for tendon-driven continuum mechanisms." Frontiers in Robotics and AI, 2021.

Method

Integrating Pose Estimation with RL

Neck Control Results

Pose Prediction Results

- Reliable Software Tools for RL

- Smooth Exploration

- Integrating Pose Estimation

- Combining Oscillators and RL

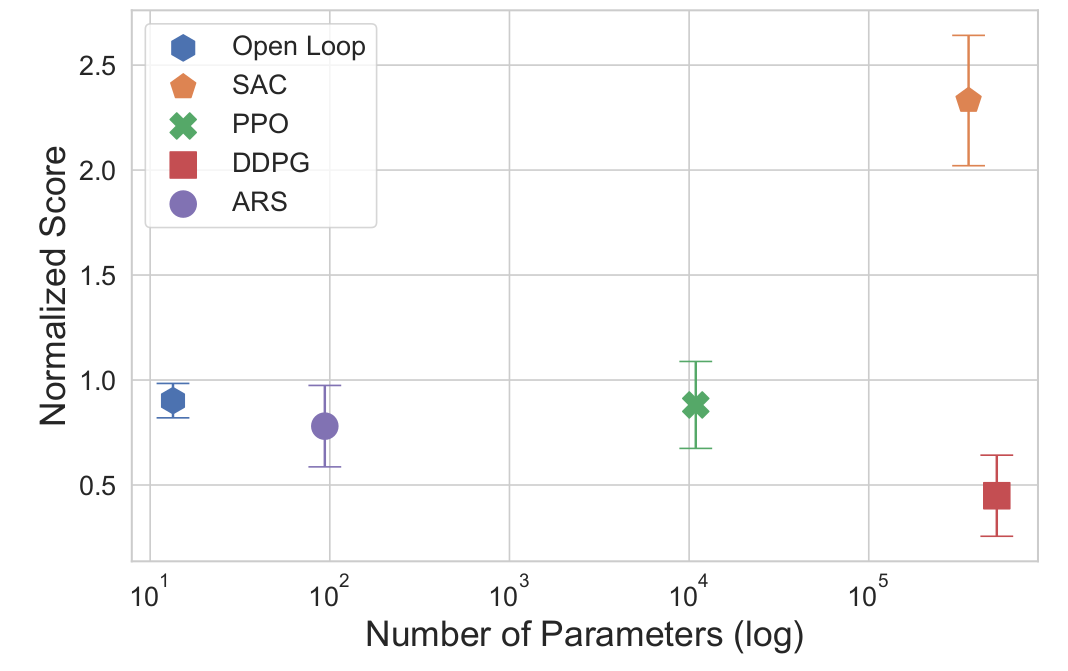

An Open-Loop Baseline for RL Locomotion Tasks

Raffin et al. "An Open-Loop Baseline for Reinforcement Learning Locomotion Tasks", RLJ 2024.

Periodic Policy

Cost of generality vs prior knowledge

Combining Open-Loop Oscillators with RL

Learning to Exploit Elastic Actuators

Raffin et al. "Learning to Exploit Elastic Actuators for Quadruped Locomotion" 2023.

Challenges of real robot training (2)

-

(Exploration-induced) wear and tear

➜ smooth exploration, feedforward controller, open-loop oscillators -

Sample efficiency

➜ prior knowledge, recent algorithms -

Real-time constraints

➜ fast implementations, reproducible experiments -

Computational resource constraints

➜ open-loop oscillators, deploy with ONNX, fast pose estimation

Conclusion

- High quality software

- Safer exploration

- Leverage prior knowledge

- Future: pre-train in sim, fine-tune on real hardware?

Questions?

Backup Slides

Additional Video

2nd Mission

Before

After, new arm position + magnet

Broken leg

Elastic Neck

Raffin, Antonin, Jens Kober, and Freek Stulp. "Smooth exploration for robotic reinforcement learning." CoRL. PMLR, 2022.

Pose Estimation Results

Simulation is all you need?

Parameter efficiency?

Plotting

python -m rl_zoo3.cli all_plots -a sac -e HalfCheetah Ant -f logs/ -o sac_results

python -m rl_zoo3.cli plot_from_file -i sac_results.pkl -latex -l SAC --rliable

RL Zoo: Reproducible Experiments

- Training, loading, plotting, hyperparameter optimization

- W&B integration

- 200+ trained models with tuned hyperparameters

In practice

# Train an SAC agent on Pendulum using tuned hyperparameters,

# evaluate the agent every 1k steps and save a checkpoint every 10k steps

# Pass custom hyperparams to the algo/env

python -m rl_zoo3.train --algo sac --env Pendulum-v1 --eval-freq 1000 \

--save-freq 10000 -params train_freq:2 --env-kwargs g:9.8

sac/

└── Pendulum-v1_1 # One folder per experiment

├── 0.monitor.csv # episodic return

├── best_model.zip # best model according to evaluation

├── evaluations.npz # evaluation results

├── Pendulum-v1

│ ├── args.yml # custom cli arguments

│ ├── config.yml # hyperparameters

│ └── vecnormalize.pkl # normalization

├── Pendulum-v1.zip # final model

└── rl_model_10000_steps.zip # checkpoint